Conclusion

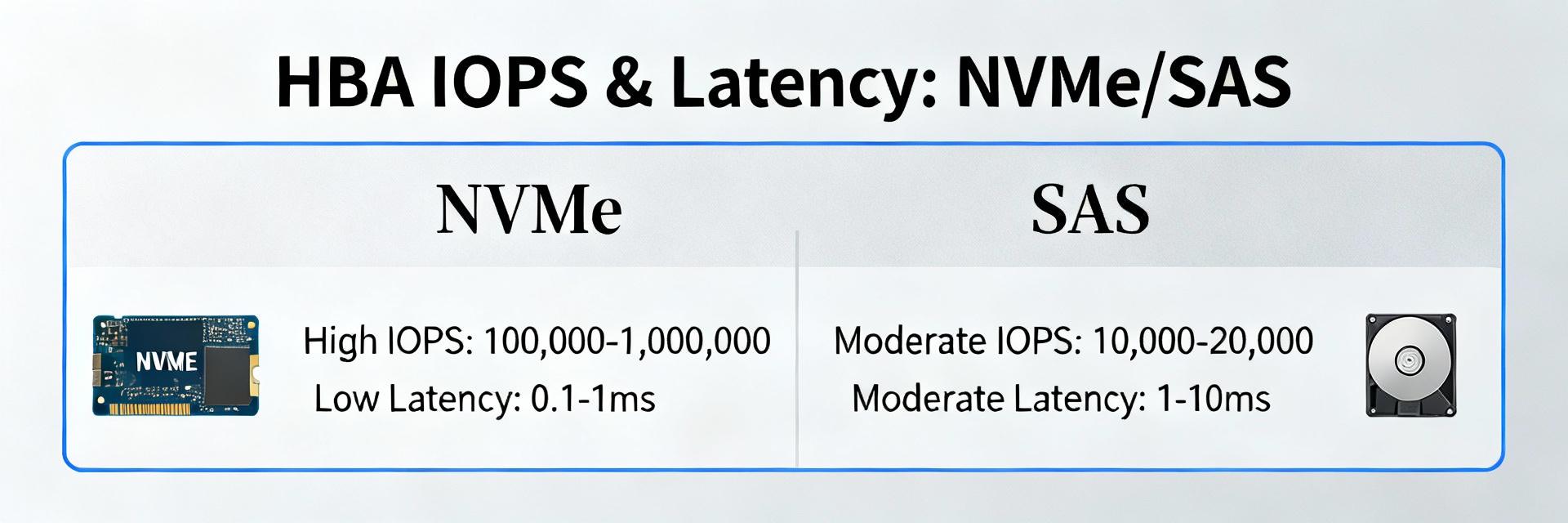

This performance report shows that the 05-50111-01 HBA delivers strong IOPS and predictable latency when it is paired with NVMe media and properly tuned host settings. Recommended next steps are to apply the tested firmware and driver builds, follow the tuning checklist, and deploy monitoring with p99-focused latency alerts to keep production behavior stable.
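The p99 alerting recommendation can be made concrete with a short Python sketch. It assumes that per-I/O completion latencies in microseconds are already being collected on the host. The `P99Alert` class, its window size, its minimum sample count, and the 500 µs threshold used below are hypothetical illustration values, not figures from this report. The sketch computes a nearest-rank 99th percentile over a sliding window of recent samples.

```python
from collections import deque


def p99(samples):
    """Return the 99th percentile of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    n = len(ordered)
    # Nearest rank = ceil(0.99 * n), computed with integers to avoid float rounding.
    rank = (99 * n + 99) // 100
    return ordered[rank - 1]


class P99Alert:
    """Hypothetical sliding-window p99 latency alert (all parameters are illustrative)."""

    def __init__(self, threshold_us, window=1000, min_samples=100):
        self.threshold_us = threshold_us
        self.min_samples = min_samples
        # deque with maxlen discards the oldest sample once the window is full.
        self.samples = deque(maxlen=window)

    def observe(self, latency_us):
        """Record one I/O completion latency; return True if the window's p99 exceeds the threshold."""
        self.samples.append(latency_us)
        if len(self.samples) < self.min_samples:
            return False  # too few samples for a meaningful p99
        return p99(self.samples) > self.threshold_us
```

For example, create `alert = P99Alert(threshold_us=500)` and call `alert.observe(latency_us)` on each completion. Once the 1000-sample window is full, it returns `True` when more than 1% of those completions exceed 500 µs. Alerting on p99 rather than the mean catches tail-latency regressions that averages hide. Re-sorting the window on every sample is acceptable for a sketch, but a production monitoring stack would more typically derive percentiles from latency histograms.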