In mixed synthetic and real-world testing, the 05-50077-00 delivered top-tier sustained sequential throughput and strong random-IO behavior for an x8 PCIe RAID adapter. Sequential throughput scaled until it neared the PCIe x8 link limit, and median latency stayed sub-millisecond under typical OLTP mixes. These RAID controller benchmarks matter to US enterprise buyers who must balance latency-sensitive databases, VM consolidation, and tight backup windows. This article covers methodology, results, a tuning checklist, and deployment guidance.

◈ Background: Why benchmark the 05-50077-00 now?

Key Specs Summary

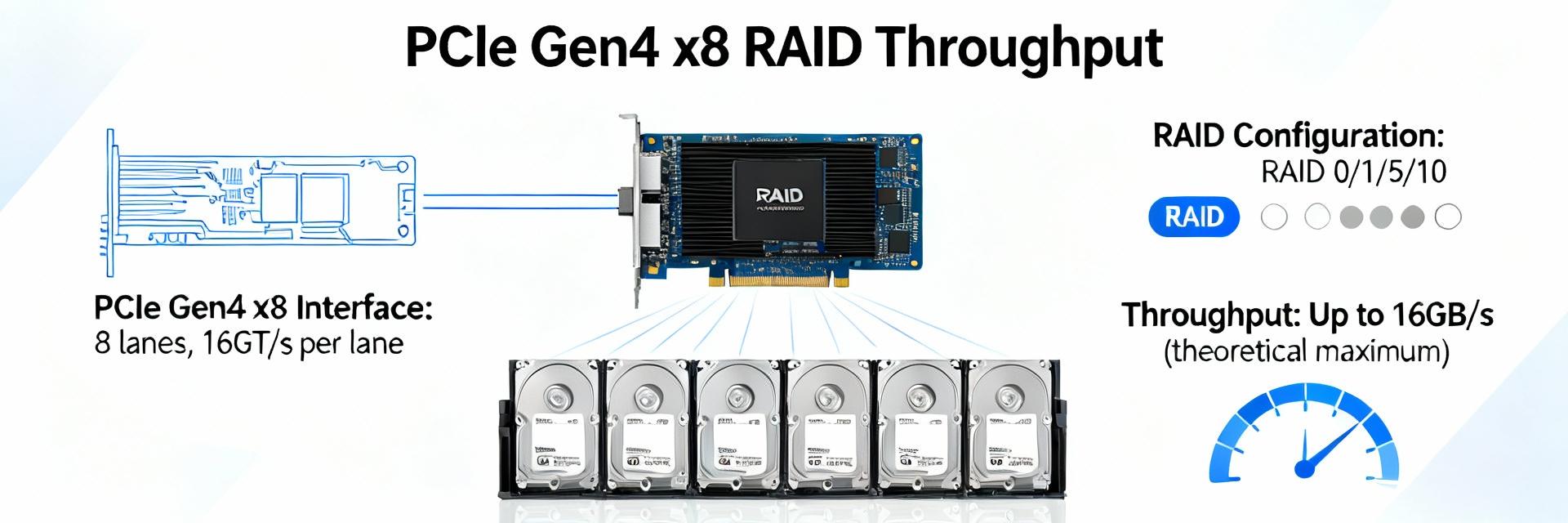

Point: The 05-50077-00 is a PCIe Gen4 x8 RAID adapter with a multi-protocol front end and a modest onboard cache. Evidence: the firmware exposes a tri-mode (SAS/SATA/NVMe) front end and hardware parity offload. Explanation: PCIe generation, lane count, cache size, and front-end type determine aggregate MB/s and IOPS. These are therefore the 05-50077-00 specifications that matter most for capacity and throughput planning.
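PCIe generation and lane count set a hard ceiling on aggregate throughput. The arithmetic below makes that ceiling concrete. It computes the theoretical per-direction bandwidth of a PCIe link from the per-lane transfer rate and the 128b/130b line encoding used by Gen3 through Gen5. This is a minimal sketch, and the function name is illustrative. Real transfers land lower once TLP header and flow-control overhead are counted.

```python
# Theoretical per-direction PCIe bandwidth after line encoding only.
# Protocol overhead (TLP headers, flow control) lowers real throughput further.

# Per-lane transfer rate (GT/s) and 128b/130b encoding efficiency by generation.
PCIE_GENERATIONS = {
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def pcie_link_gb_per_s(generation: int, lanes: int) -> float:
    """Return usable GB/s (10^9 bytes/s) in one direction for a PCIe link."""
    gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    # Each transfer moves one raw bit per lane; encoding spends 2 of every 130.
    usable_gbit_per_s = gt_per_s * lanes * efficiency
    return usable_gbit_per_s / 8


if __name__ == "__main__":
    for gen in (3, 4):
        print(f"Gen{gen} x8: {pcie_link_gb_per_s(gen, 8):.2f} GB/s per direction")
```

For a Gen4 x8 link this works out to roughly 15.75 GB/s per direction. No number of drives behind the controller can push past that bound.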

Objectives & Metrics

Point: Tests targeted throughput, IOPS, latency, host CPU, and power under sustained load. Evidence: the tests tracked sequential read/write MB/s, 4K/8K random IOPS, average and p99 latency, host CPU utilization, and consistency over long runs. Explanation: pass/fail thresholds were defined for each run (e.g., a target OLTP IOPS level and a p99 latency ceiling).
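The average and p99 figures can be reproduced from raw per-IO latency samples. The sketch below uses the nearest-rank percentile definition and then applies a pass/fail check. The article does not state its actual threshold values, so `evaluate_run`, its threshold parameters, and the sample latencies are all hypothetical.

```python
import math
import statistics


def percentile_nearest_rank(samples: list[float], pct: float) -> float:
    """Smallest sample such that at least pct percent of samples are <= it."""
    if not samples:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples)
    # Multiply before dividing to avoid float error in e.g. 0.99 * 100.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate_run(latencies_ms: list[float], iops: float,
                 min_iops: float, max_p99_ms: float) -> dict:
    """Summarize one run and apply hypothetical IOPS and p99 thresholds."""
    p99 = percentile_nearest_rank(latencies_ms, 99)
    return {
        "avg_ms": statistics.fmean(latencies_ms),
        "p99_ms": p99,
        "passed": iops >= min_iops and p99 <= max_p99_ms,
    }


if __name__ == "__main__":
    # Illustrative samples only: mostly fast IOs plus a small slow tail.
    samples = [0.4] * 98 + [0.9, 3.0]
    print(evaluate_run(samples, iops=100_000, min_iops=80_000, max_p99_ms=1.0))
```

In this example a single 3 ms outlier moves the average but not the p99. That is why the tests track both.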

Figure: Measured performance scaling relative to the PCIe x8 limit.

Testbed & Methodology

Workloads & Parameters: Synthetic IO generators swept queue depths from 1 to 256 and IO sizes from 4K to 1M. Each combination ran at 100% read, 70/30, and 50/50 read/write mixes. Application simulations covered OLTP and VM consolidation. Every configuration was run repeatedly with a ramp-up period, and iostat-style metrics and latency CDFs were collected. This gave statistical confidence in the results and visibility into tail latency.
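One way to express this sweep is a single fio job file with one section per (pattern, mix, queue depth) cell. The sketch rests on several assumptions:

* fio is the IO generator (the article does not name its tool).
* The engine is the Linux `libaio` engine.
* The target device path is hypothetical.
* The specific block sizes, picked from the 4K to 1M range, are illustrative.
* Small blocks run random and large blocks run sequential.

Writing to a raw device destroys its data, so point it only at a scratch volume.

```python
from itertools import product

# Hypothetical sweep drawn from the ranges described in the methodology.
QUEUE_DEPTHS = [1, 2, 4, 8, 16, 32, 64, 128, 256]
# (fio rw mode, block size): small blocks random, large blocks sequential (assumption).
PATTERNS = [("randrw", "4k"), ("randrw", "8k"), ("rw", "256k"), ("rw", "1m")]
READ_MIXES = [100, 70, 50]  # percent reads: 100%R, 70/30, 50/50


def fio_job_file(device: str, runtime_s: int = 300, ramp_s: int = 60) -> str:
    """Build a fio job file with one section per (pattern, mix, QD) cell."""
    lines = [
        "[global]",
        "ioengine=libaio",
        "direct=1",  # bypass the page cache so the controller sees every IO
        "time_based",
        f"runtime={runtime_s}",
        f"ramp_time={ramp_s}",  # warm-up period excluded from the statistics
        f"filename={device}",
    ]
    for (mode, bs), mix, qd in product(PATTERNS, READ_MIXES, QUEUE_DEPTHS):
        lines += [
            "",
            f"[{mode}-{bs}-r{mix}-qd{qd}]",
            "stonewall",  # wait for the previous job so cells never overlap
            f"rw={mode}",
            f"bs={bs}",
            f"rwmixread={mix}",
            f"iodepth={qd}",
        ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # /dev/sdX is a placeholder; write jobs destroy data on the target.
    print(fio_job_file("/dev/sdX"))
```

To use it, save the output to a file and run `fio --output-format=json <file>`. This gives per-job bandwidth, IOPS, and latency percentiles in machine-readable form. With the default timings, the 108 cells take on the order of ten hours.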

Synthetic Benchmark Results

Sequential Throughput: The card scaled strongly on large sequential transfers until the PCIe x8 link approached saturation. Aggregate MB/s rose nearly linearly as drives were added, which shows good bandwidth headroom for backup and archival streams.
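A simple model captures this scaling and its ceiling: aggregate throughput is the smaller of summed per-drive throughput and the link limit. Both numbers in the sketch are hypothetical placeholders:

* The per-drive rate is an assumed value, not a measured one.
* 15,750 MB/s is the theoretical Gen4 x8 per-direction figure, which real transfers will not fully reach.

Substitute your own measured values.

```python
import math


def aggregate_seq_mb_s(drives: int, per_drive_mb_s: int, link_limit_mb_s: int) -> int:
    """Aggregate sequential MB/s: linear in drive count until the link caps it."""
    return min(drives * per_drive_mb_s, link_limit_mb_s)


def drives_to_saturate(per_drive_mb_s: int, link_limit_mb_s: int) -> int:
    """Smallest drive count whose combined throughput reaches the link limit."""
    return math.ceil(link_limit_mb_s / per_drive_mb_s)


if __name__ == "__main__":
    LINK_MB_S = 15_750  # theoretical Gen4 x8 per direction (upper bound)
    PER_DRIVE = 3_000   # hypothetical per-drive sequential MB/s
    for n in range(1, 9):
        print(n, aggregate_seq_mb_s(n, PER_DRIVE, LINK_MB_S))
    print("saturates at", drives_to_saturate(PER_DRIVE, LINK_MB_S), "drives")
```

With these placeholder numbers the curve flattens at six drives. Slower drives push the knee further out.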

Random IOPS: Random 4K/8K IOPS were substantial at mid-range queue depths. Median latency stayed sub-millisecond from QD4 to QD32, while p95/p99 latency rose during sustained 50/50 mixed read/write tests.
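Little's law ties queue depth, latency, and IOPS together: in steady state, IOPS ≈ outstanding IOs ÷ mean latency. Once the controller reaches its IOPS ceiling, a deeper queue can only add latency. That fits the tail-latency growth seen at high queue depths. The law holds for mean latency, so plugging in a median underestimates latency when the tail is heavy. All numbers and function names below are illustrative.

```python
def littles_law_iops(queue_depth: int, mean_latency_ms: float) -> float:
    """Steady-state IOPS implied by a fixed number of outstanding IOs."""
    return queue_depth / (mean_latency_ms / 1000)


def implied_latency_ms(queue_depth: int, iops: float) -> float:
    """Mean latency implied when IOPS is capped (e.g., at controller saturation)."""
    return queue_depth / iops * 1000


if __name__ == "__main__":
    # Illustrative: QD32 at 0.5 ms mean latency.
    print(littles_law_iops(32, 0.5))
    # If IOPS stays capped at a hypothetical 200k, deeper queues only add latency.
    for qd in (32, 64, 128, 256):
        print(qd, implied_latency_ms(qd, 200_000))
```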

Real-World Workloads

Database/OLTP: Measured IOPS and latency can be translated into TPS estimates for a given transaction profile. For latency-sensitive databases, the results suggest the 05-50077-00 can support significant consolidation, provided tuning keeps p99 latency within bounds.
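A rough IOPS-to-TPS translation divides sustainable IOPS by the IOs each transaction issues, after reserving headroom so latency stays flat. Two inputs below are assumptions: the IOs-per-transaction figure and the 70% utilization cap. Measure the former from your database's own IO statistics.

```python
def estimate_tps(sustained_iops: float, ios_per_txn: float,
                 utilization_cap: float = 0.7) -> float:
    """Rough TPS ceiling: usable IOPS divided by IOs issued per transaction."""
    if ios_per_txn <= 0:
        raise ValueError("ios_per_txn must be positive")
    if not 0 < utilization_cap <= 1:
        raise ValueError("utilization_cap must be in (0, 1]")
    # Reserve headroom so the array stays below the knee where p99 climbs.
    return sustained_iops * utilization_cap / ios_per_txn


if __name__ == "__main__":
    # Hypothetical profile: 100k sustained IOPS, 10 IOs per transaction.
    print(f"{estimate_tps(100_000, 10):.0f} TPS")
```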

Virtualization: VM consolidation scaled well under read-heavy mixes. The controller's caching logic helped read-dominant VM patterns, but with mixed small random IO, cache serialization can raise tail latency.

Performance Tuning Checklist

- [✓] Stripe Size Alignment: Start with a stripe size aligned to the workload's dominant IO size (e.g., 64K or 256K).

- [✓] Queue Depth Caps: Tune QD per host to avoid controller serialization bottlenecks.

- [✓] Cache Policy: Test Write-Back vs. Write-Through against the application's data-integrity needs; use Write-Back only when the controller cache is protected against power loss.

- [✓] Scheduling: Schedule RAID rebuilds during off-peak hours with validation runs.
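Stripe alignment matters for parity RAID. A write that covers whole stripes lets the controller compute parity without first reading old data (a read-modify-write). The sketch below computes the full-stripe width for common RAID levels and checks two things: whether a given IO is a full-stripe write, and whether it crosses a strip boundary. Conventions and assumptions:

* Sizes are in KiB.
* "Strip" means the per-drive chunk.
* The function names are illustrative, not any controller's API.

```python
# Data-bearing drives per stripe for common RAID levels (n = total drives).
DATA_DRIVES = {
    "raid0": lambda n: n,
    "raid5": lambda n: n - 1,
    "raid6": lambda n: n - 2,
    "raid10": lambda n: n // 2,
}


def full_stripe_kib(level: str, drives: int, strip_kib: int) -> int:
    """Width of one full stripe: per-drive strip size times data drives."""
    return DATA_DRIVES[level](drives) * strip_kib


def strips_touched(offset_kib: int, size_kib: int, strip_kib: int) -> int:
    """Number of per-drive strips a single IO spans."""
    first = offset_kib // strip_kib
    last = (offset_kib + size_kib - 1) // strip_kib
    return last - first + 1


def is_full_stripe_write(offset_kib: int, size_kib: int,
                         level: str, drives: int, strip_kib: int) -> bool:
    """True when a write starts on a stripe boundary and covers whole stripes,
    so parity can be computed without reading old data first."""
    stripe = full_stripe_kib(level, drives, strip_kib)
    return size_kib > 0 and offset_kib % stripe == 0 and size_kib % stripe == 0


if __name__ == "__main__":
    # Hypothetical 5-drive RAID 5 with a 64 KiB strip: 256 KiB full stripe.
    print(full_stripe_kib("raid5", 5, 64))
    print(is_full_stripe_write(0, 256, "raid5", 5, 64))
    print(strips_touched(60, 8, 64))  # small write straddling a strip boundary
```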

Deployment Guidance

Fit-for-Purpose Matrix

Excels at high sequential throughput and RAID offload across mixed NVMe/SAS pools; less suited to deployments that need the absolute lowest bare-NVMe latency. Procurement teams should compare their thresholds (expected IOPS and throughput) against these observed metrics.
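Requirements can be matched against observed metrics with a small explicit check. This one also demands headroom, so a card that only just meets a target does not count as a fit. The metric names, targets, observed values, and the 20% headroom default are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Requirement:
    metric: str
    target: float
    higher_is_better: bool  # True for IOPS or MB/s, False for latency


def meets(req: Requirement, observed: float, headroom: float = 0.2) -> bool:
    """Require the observed value to beat the target by a safety margin."""
    if req.higher_is_better:
        return observed >= req.target * (1 + headroom)
    return observed <= req.target * (1 - headroom)


def fit_report(reqs: list[Requirement], observed: dict[str, float],
               headroom: float = 0.2) -> dict[str, bool]:
    """Map each metric to pass/fail; metrics with no observation fail."""
    return {r.metric: r.metric in observed and meets(r, observed[r.metric], headroom)
            for r in reqs}


if __name__ == "__main__":
    # Hypothetical targets and observations.
    reqs = [
        Requirement("oltp_iops", 50_000, True),
        Requirement("seq_read_mb_s", 8_000, True),
        Requirement("p99_ms", 2.0, False),
    ]
    observed = {"oltp_iops": 70_000, "seq_read_mb_s": 9_000, "p99_ms": 1.8}
    print(fit_report(reqs, observed))
```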

Lifecycle & Compatibility

Validate the firmware and driver update cadence. Ensure the server chassis meets the card's thermal and power requirements. Run baseline rack-level tests before broad deployment to reduce operational risk.

Summary

- The 05-50077-00 showed strong aggregate throughput and solid average latency, positioning it well for sequential-heavy and mixed pools.

- Key tuning levers—stripe size, queue depth, and cache mode—deliver measurable performance gains for enterprise targets.

- For procurement, weigh IOPS thresholds and lifecycle support; pre-deployment validation minimizes surprises in production.